TL;DR:

We tested how four major AI models (ChatGPT, Claude, Gemini, and Llama) express emotions in their writing. ChatGPT expressed emotion most consistently, while Gemini varied the most. That gap in sentiment consistency has practical implications for choosing the right AI model for a task, whether it’s professional communication or creative writing.

Data visualizations are at the bottom of the page.

In our recent (and very first!) study, we analyzed how AI language models express emotions in their writing. We examined four leading AI models – ChatGPT 4, Claude 3.5 Sonnet, Gemini 1.5 Flash, and Llama 8B – to understand how they handle emotional expression and variations in emotional intensity within generated text.

We systematically tested each AI model’s ability to generate text in 17 different emotional tones, collecting 50 samples per emotion from each model. Using specialized analysis tools, we measured both the consistency and accuracy of emotional expression across these samples.
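To make the setup concrete, here is a minimal sketch of the sampling grid and one possible way to summarize consistency. It assumes each text sample has already been turned into a single numeric sentiment score; the `summarize` helper and the use of standard deviation as the "spread" are our illustrative assumptions, not a description of the exact tools used in the study.

```python
from statistics import mean, pstdev

MODELS = ["ChatGPT", "Claude", "Gemini", "Llama"]
N_EMOTIONS = 17            # emotional tones tested per model
SAMPLES_PER_EMOTION = 50   # samples collected per emotion, per model


def total_samples(n_models, n_emotions, samples_per_emotion):
    """Total number of generated texts in the full grid."""
    return n_models * n_emotions * samples_per_emotion


def summarize(scores):
    """Return (average sentiment, spread) for one model/emotion cell.

    Spread is the population standard deviation: a smaller value means
    the samples for that emotion are more consistent with each other.
    This metric is an assumption made for illustration.
    """
    return mean(scores), pstdev(scores)


print(total_samples(len(MODELS), N_EMOTIONS, SAMPLES_PER_EMOTION))  # 3400
```

With 4 models, 17 emotions, and 50 samples each, the grid comes to 3,400 generated texts in total.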

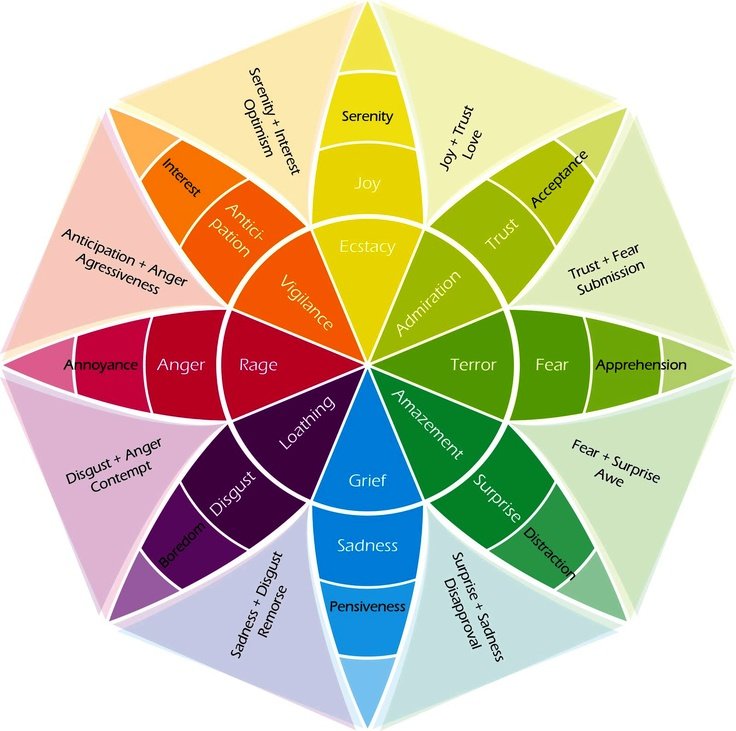

Our study has its foundation in the “flower” graphic below. This graphic shows “petals” of emotions, or dyads. The bright “petals” are the set of primary dyads, while the spaces in between show the secondary dyads.

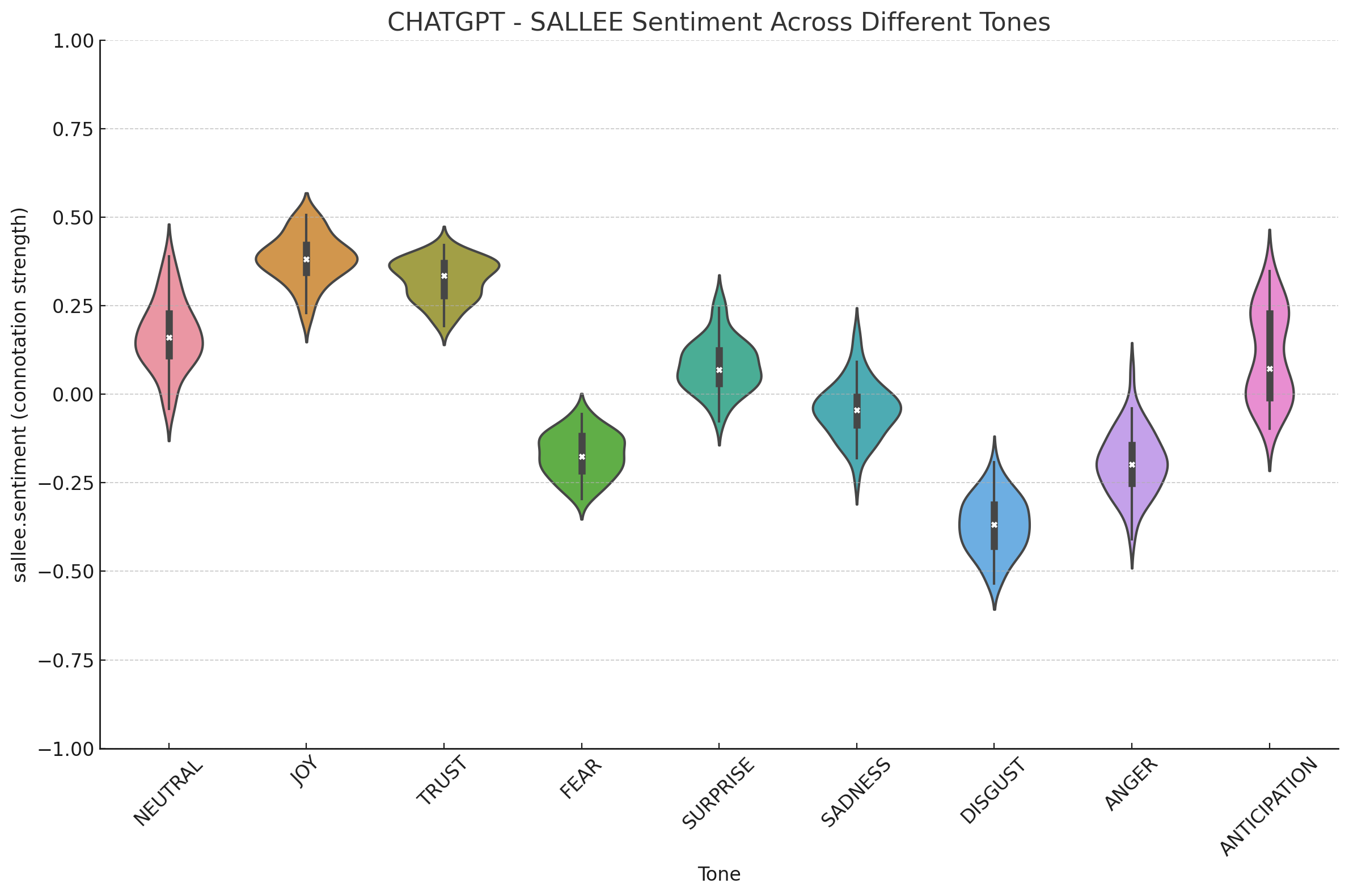

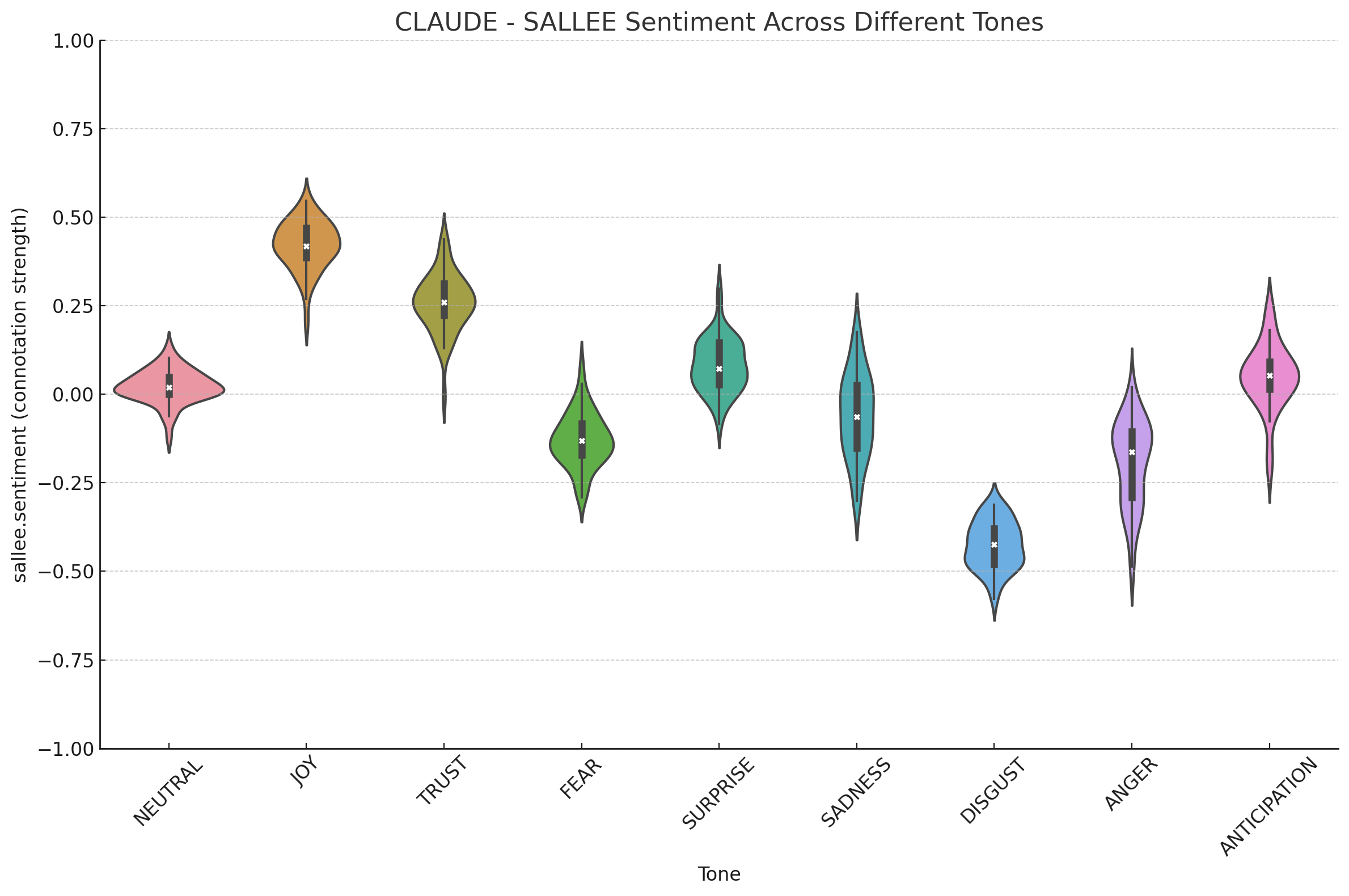

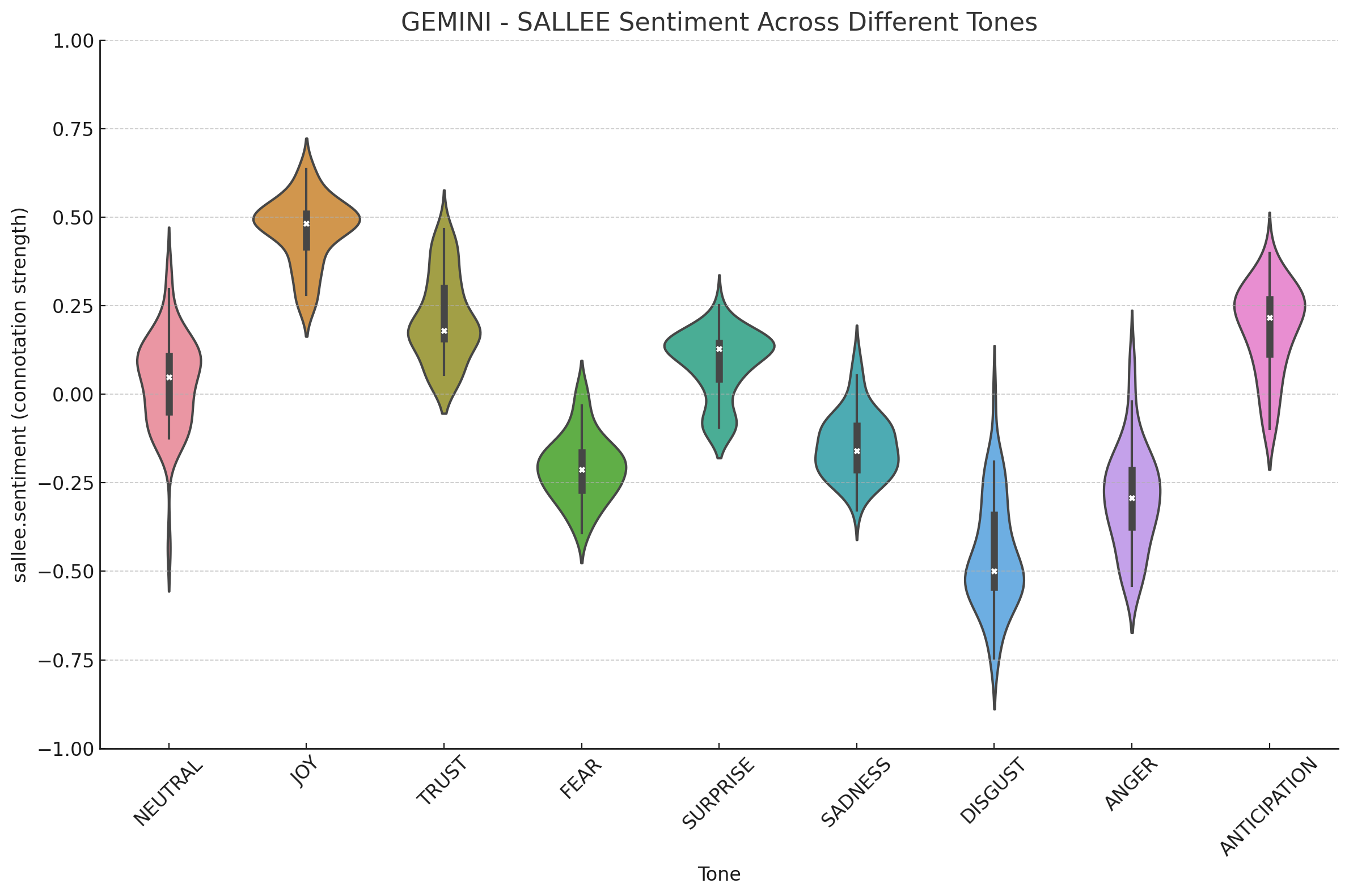

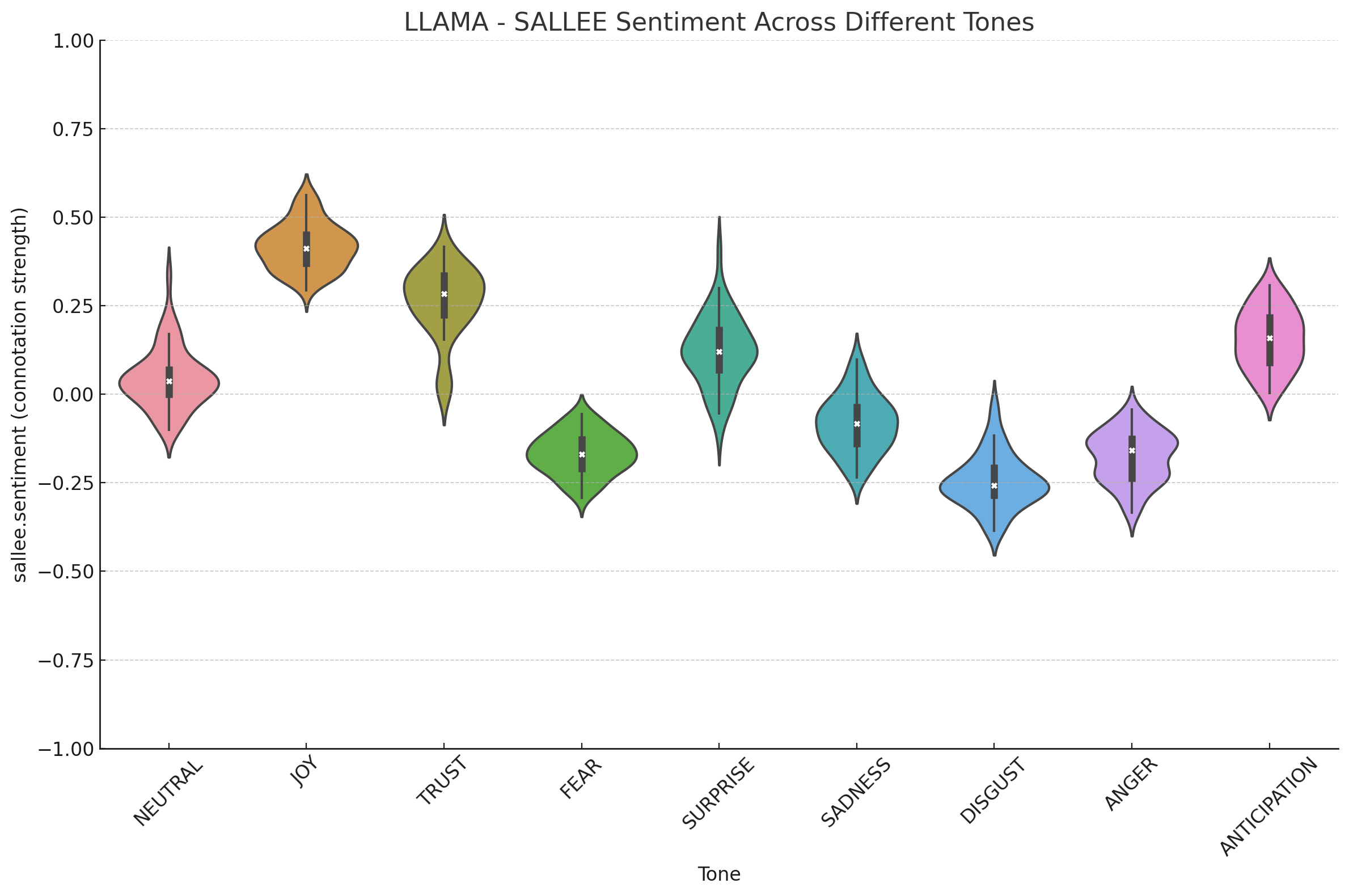

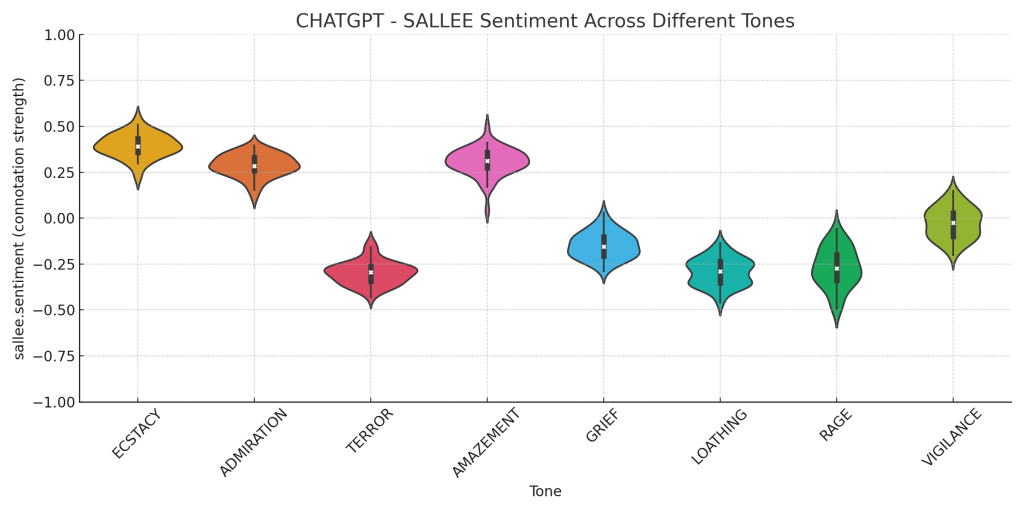

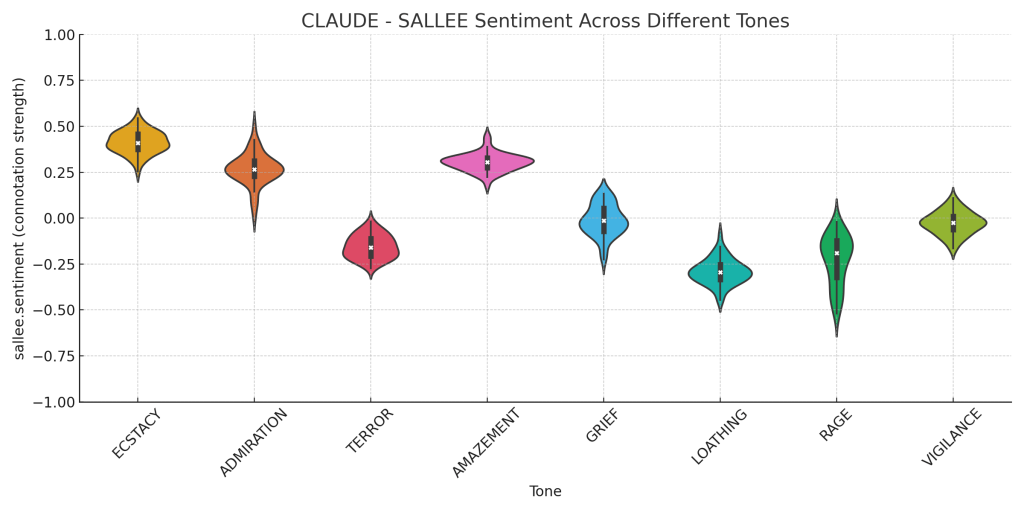

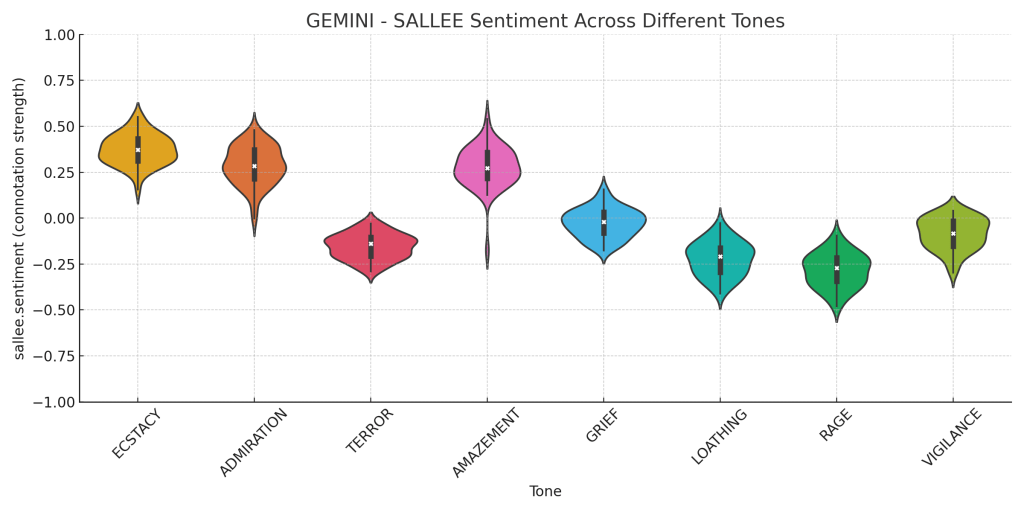

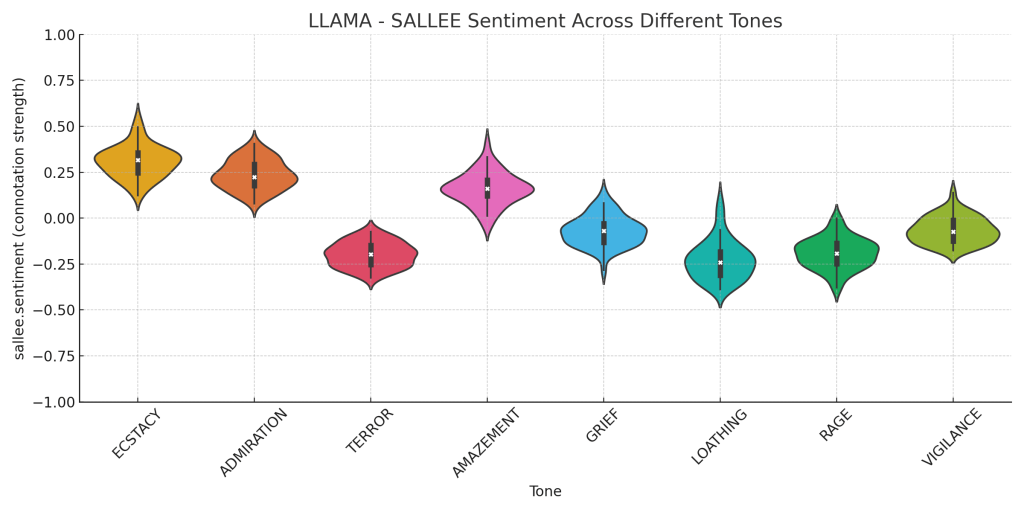

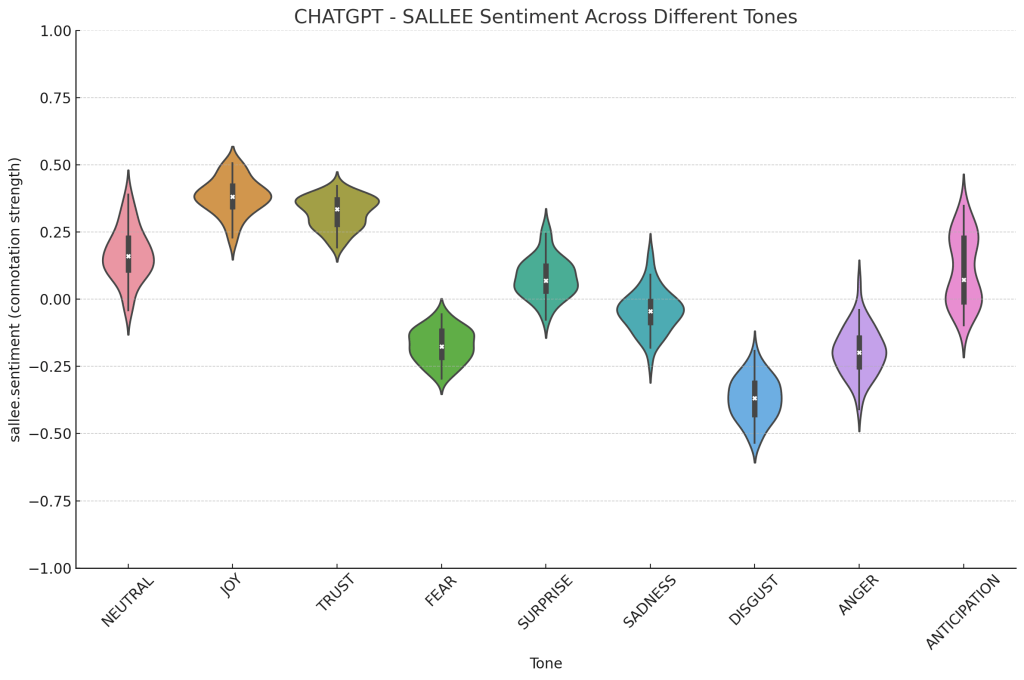

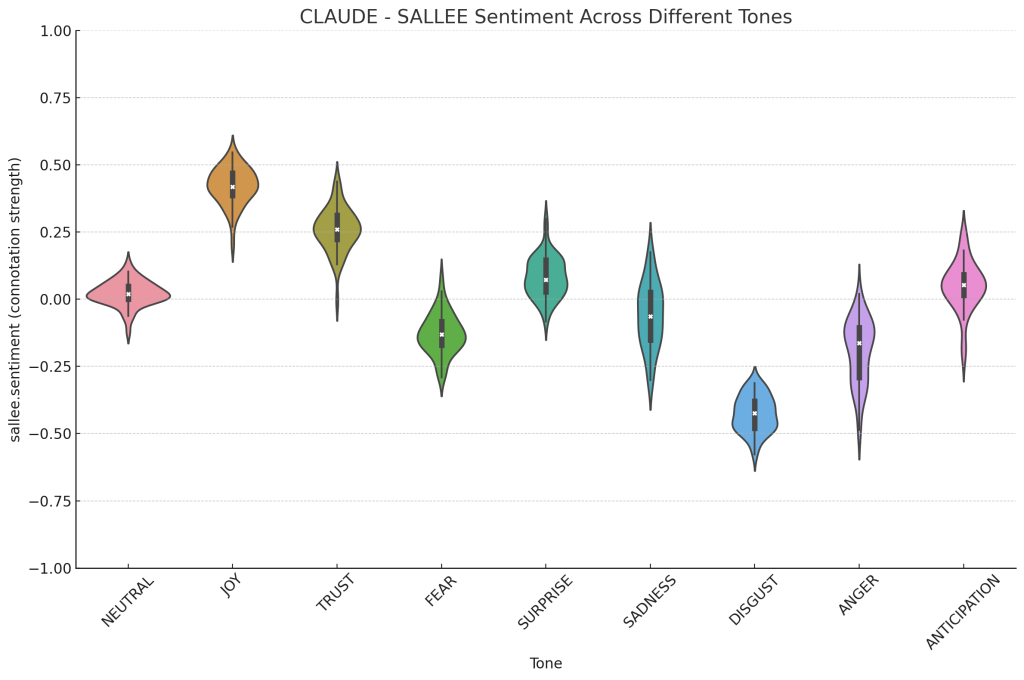

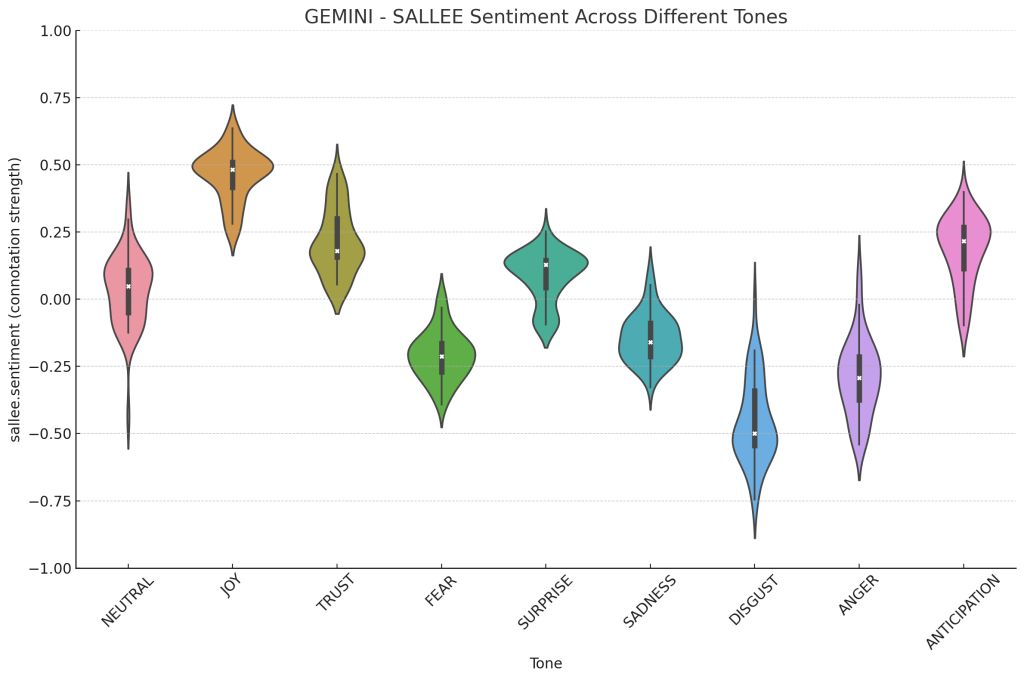

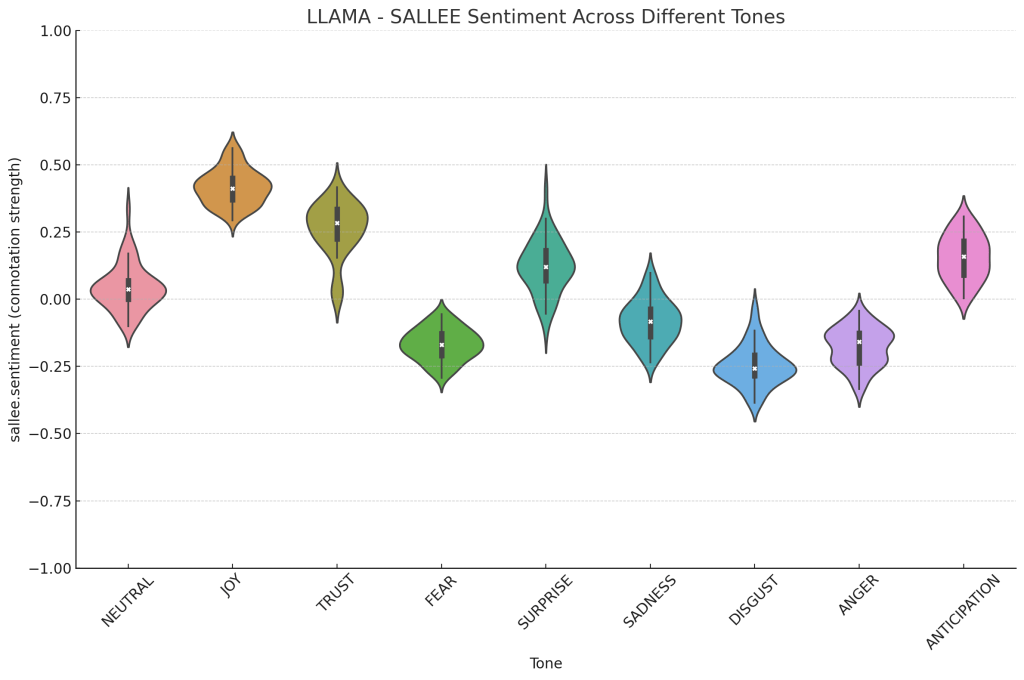

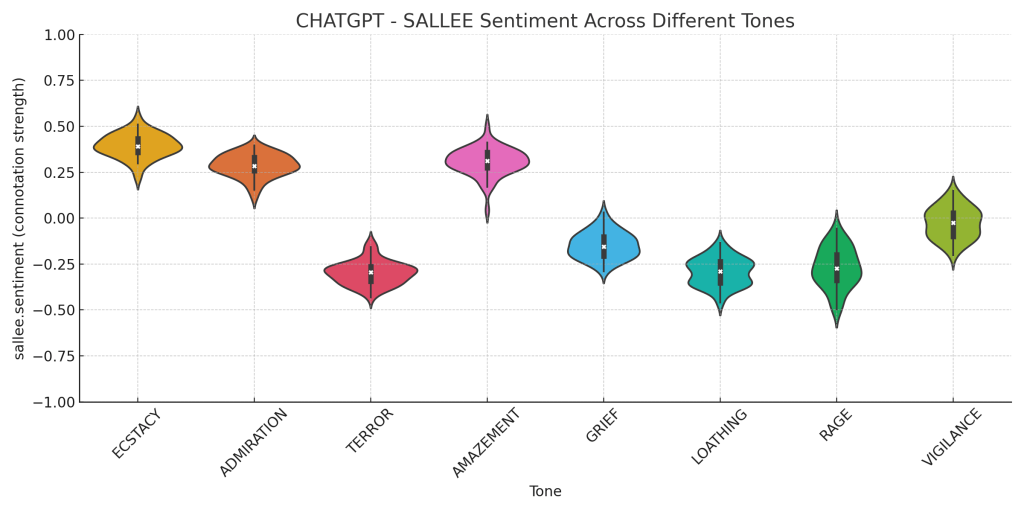

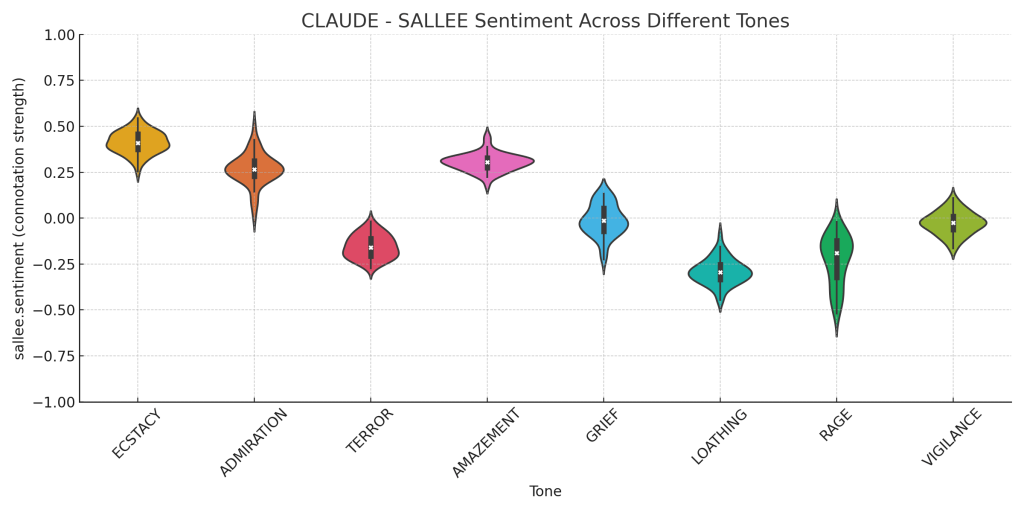

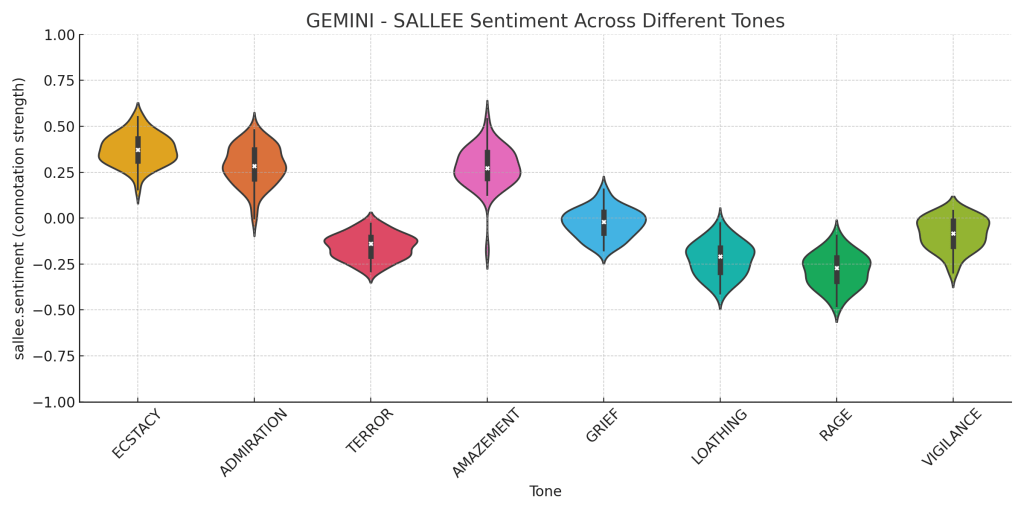

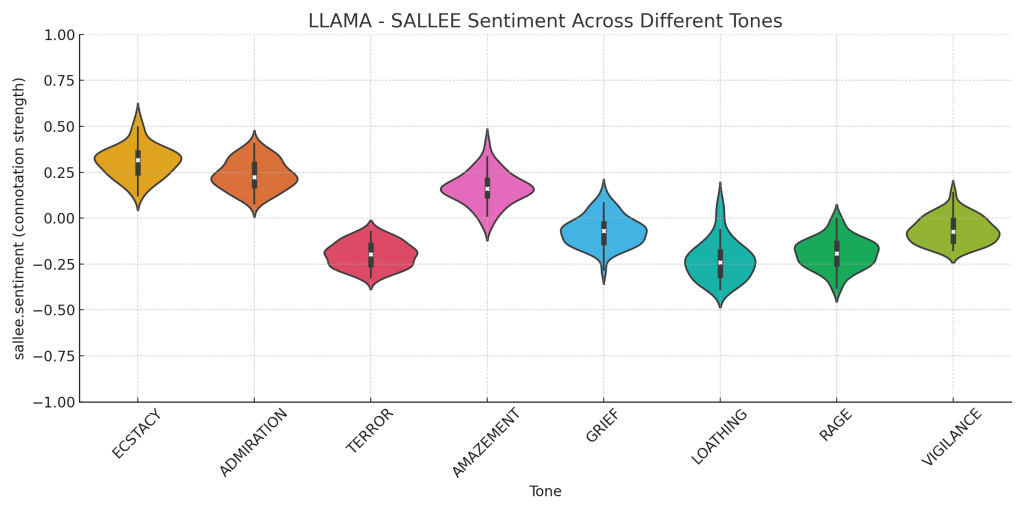

If you look at the data visualizations (bottom of page), you’ll notice that Gemini’s “violins” are generally quite a bit longer than ChatGPT’s. A longer violin means the sentiment scores for that emotion are spread over a wider range, so this reflects Gemini’s tendency towards higher emotional variability and ChatGPT’s tendency towards emotional consistency.

According to our findings, the models ranked from least to most variable as follows:

ChatGPT < Llama < Claude < Gemini
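A ranking like this can be produced by averaging each model's per-emotion spread and sorting. The sketch below shows one way to do that; the function name, the use of standard deviation, and the toy scores for the placeholder models `model_a` and `model_b` are all invented for illustration and are not data from the study.

```python
from statistics import mean, pstdev


def rank_by_variability(scores_by_model):
    """Order models from least to most variable.

    scores_by_model maps model name -> {emotion: [sentiment scores]}.
    Variability here is the mean of the per-emotion standard deviations,
    which is an assumed metric, not necessarily the study's.
    """
    avg_spread = {
        model: mean([pstdev(scores) for scores in by_emotion.values()])
        for model, by_emotion in scores_by_model.items()
    }
    return sorted(avg_spread, key=avg_spread.get)


# Toy scores for two placeholder models (NOT results from the study).
toy_scores = {
    "model_b": {"joy": [0.2, 0.9, 0.4, 0.8], "anger": [-0.9, -0.1, -0.6, -0.2]},
    "model_a": {"joy": [0.6, 0.6, 0.6, 0.6], "anger": [-0.5, -0.5, -0.5, -0.5]},
}

print(rank_by_variability(toy_scores))  # ['model_a', 'model_b']
```

Averaging across emotions is only one choice; taking the median or the widest single emotion would emphasize different behavior.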

It’s important to note that this variability isn’t necessarily a weakness – there is an important trade-off between consistency and expressiveness, which suggests that different models might be better suited for different applications.

One notable discovery was how differently the AI models handled emotional combinations from what we predicted. For instance, all four models interpreted “anticipation” positively but “vigilance” negatively, even though both belong to the same primary dyad (the same general emotion, with variations in intensity).
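A check for this kind of polarity flip can be sketched in a few lines. The example assumes sentiment scores on a scale where values above zero are positive and values below zero are negative; the helper names and the toy numbers are hypothetical and are not taken from the study's data.

```python
from statistics import mean


def polarity(scores):
    """Label a set of sentiment scores by the sign of their average."""
    avg = mean(scores)
    if avg > 0:
        return "positive"
    if avg < 0:
        return "negative"
    return "neutral"


def same_polarity(baseline_scores, intense_scores):
    """True if a baseline emotion and its intense variant agree in sign."""
    return polarity(baseline_scores) == polarity(intense_scores)


# Invented scores for a baseline emotion and its higher-intensity variant.
baseline = [0.4, 0.6, 0.5]
intense = [-0.3, -0.5, -0.2]

print(polarity(baseline), polarity(intense))  # positive negative
print(same_polarity(baseline, intense))       # False
```

If intensity only scaled an emotion up or down, the two labels would match; a mismatch is the pattern described above.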

These findings challenge existing frameworks for understanding artificial emotional expression and suggest the need for new approaches tailored to AI capabilities. As these models increasingly mediate human-computer interaction, understanding their emotional expression capabilities becomes crucial for effectively deploying them across various contexts, from professional communication to creative applications.

Visualization discussion:

Below are two sets of charts. The first set shows the “baseline” sentiments within the primary dyad. The second set shows the “higher intensity” sentiments, also within the primary dyad.

Scroll through to see the PRIMARY DYAD – BASELINE violin plots for each LLM, shown in this order: ChatGPT, Claude, Gemini, Llama.

Scroll through to see the PRIMARY DYAD – INTENSE violin plots for each LLM, shown in this order: ChatGPT, Claude, Gemini, Llama.
